Risk-parity investment strategies allocate more capital to less risky investments in order to reach a given return target. Leverage is used to “supercharge” the allocation to the least risky asset class. Conceptually, it is a great plan.
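A minimal sketch of the idea, assuming the simplest "naive" risk-parity rule: weight each asset class by the inverse of its volatility, then apply leverage so that the whole portfolio hits a volatility target. Full risk-parity implementations usually equalize each asset's risk contribution using correlations as well. The volatilities, correlations and the 10% target below are hypothetical.

```python
import numpy as np

def inverse_vol_weights(vols):
    """Weight each asset in proportion to 1 / volatility (weights sum to 1)."""
    inv = 1.0 / np.asarray(vols, dtype=float)
    return inv / inv.sum()

def leverage_to_target(weights, cov, target_vol):
    """Scale factor that lifts the portfolio's volatility to target_vol."""
    port_vol = np.sqrt(weights @ cov @ weights)
    return target_vol / port_vol

# Hypothetical annualized volatilities: equities, bonds, commodities.
vols = np.array([0.16, 0.05, 0.20])
corr = np.array([
    [1.0, -0.2, 0.3],
    [-0.2, 1.0, -0.1],
    [0.3, -0.1, 1.0],
])
cov = np.outer(vols, vols) * corr  # covariance from vols and correlations

w = inverse_vol_weights(vols)
lev = leverage_to_target(w, cov, target_vol=0.10)

print("unlevered weights:", w.round(3))
print("leverage:", round(float(lev), 2))
print("levered exposures:", (w * lev).round(3))
```

Because bonds have the lowest volatility, they receive the largest weight, and after leverage the bond exposure exceeds 100% of capital, which is exactly the “supercharged” low-risk allocation described above.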

In practice, most risk-parity strategies lean heavily on long bonds, levering up long-bond positions through futures and other derivatives. During the 10-year bond bull market, and over the past five years of easy money, this has been a great tactic and has produced excess returns.
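Leverage cuts both ways. A first-order duration approximation puts a bond's price change at roughly -D × Δy, where D is modified duration and Δy the change in yield, and leverage L multiplies that. The sketch below assumes a hypothetical duration of 8 and 2x leverage, and it ignores convexity, coupon carry and financing costs.

```python
def levered_bond_return(yield_change, duration, leverage):
    """First-order (duration-only) return on a levered bond position.

    yield_change: change in yield as a decimal (0.01 = +100 bp)
    duration: modified duration of the bond exposure, in years
    leverage: notional exposure per unit of capital (2.0 = 2x)
    Convexity, carry and financing costs are deliberately ignored.
    """
    price_return = -duration * yield_change
    return leverage * price_return

# Hypothetical long-bond exposure: modified duration 8, levered 2x.
for bp in (-100, -50, 50, 100, 200):
    r = levered_bond_return(bp / 10_000, duration=8.0, leverage=2.0)
    print(f"{bp:+d} bp -> {r:+.1%}")
```

Falling yields (the bull-market case) turn into amplified gains; rising yields turn into equally amplified losses.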

The question for risk-parity investors is whether this strategy will hold up going forward. One school of thought says that coordinated, cheap central-bank money has inflated both bond and stock prices to artificial levels. Others say that while the bond market has peaked, equity and commodity markets still have room to climb. No one really knows. However, several factors should prompt risk-parity investors to dig deeper into exposure analysis, chief among them that risk-parity strategies have never been tested in a bond bear market.

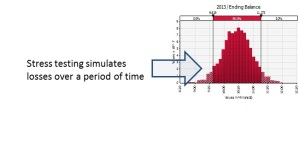

To this end, I would recommend an r-Dex analysis of any risk-parity strategy. Never heard of r-Dex? r-Dex, or Risk Downside Exposure, is similar to Value at Risk (VaR) in that it measures downside risk to the expected value of a portfolio. r-Dex measures this risk by stress-testing the “left tail” of the portfolio's return distribution. Its value is that it lets you test either for highly correlated negative movements across all asset classes (dependent testing) or for a simulated “break” in a single asset class (independent testing).
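The post does not spell out how r-Dex itself is computed, so the sketch below is not r-Dex. It shows the standard left-tail measures it is compared with: historical VaR (the loss at the alpha quantile of returns) and expected shortfall (the average loss beyond that quantile). The return series is hypothetical, drawn from a fat-tailed Student-t distribution.

```python
import numpy as np

def left_tail_risk(returns, alpha=0.05):
    """Historical VaR and expected shortfall at level alpha.

    Both are reported as positive loss fractions.
    """
    r = np.asarray(returns, dtype=float)
    q = np.quantile(r, alpha)   # alpha-quantile of returns (left tail)
    var = -q
    es = -r[r <= q].mean()      # average return at or beyond the quantile
    return var, es

# Hypothetical fat-tailed daily returns (about 10 years of trading days).
rng = np.random.default_rng(42)
daily = 0.007 * rng.standard_t(df=4, size=2500)

var95, es95 = left_tail_risk(daily, alpha=0.05)
print(f"95% VaR: {var95:.2%}, expected shortfall: {es95:.2%}")
```

Expected shortfall is always at least as large as VaR, because it averages the losses that lie beyond the VaR threshold.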

From a risk-parity standpoint, r-Dex would let you simulate the risk of a simultaneous break in the equity, bond, and commodity markets. Given that almost everyone agrees the bond market has minimal upside potential, at a minimum an r-Dex independent test could be run on the fixed-income assets alone.
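A simplified way to picture the two kinds of test is as instantaneous shock scenarios applied to a levered portfolio: a dependent scenario hits every asset class at once, while an independent scenario breaks only one of them. This is an illustrative sketch, not r-Dex's methodology; the exposures, the shock sizes and the `independent_scenario` helper are all hypothetical.

```python
import numpy as np

ASSETS = ["equities", "bonds", "commodities"]

def stress_return(exposures, shocks):
    """Portfolio return, as a fraction of capital, under an instantaneous shock."""
    return float(np.dot(exposures, shocks))

def independent_scenario(asset, shock):
    """Hypothetical helper: shock one asset class, leave the others unchanged."""
    s = np.zeros(len(ASSETS))
    s[ASSETS.index(asset)] = shock
    return s

# Hypothetical levered exposures per unit of capital (bond-heavy, >100% bonds).
exposures = np.array([0.36, 1.16, 0.29])

# Dependent test: equities, bonds and commodities all fall together.
joint = np.array([-0.20, -0.10, -0.15])
# Independent test: only fixed income breaks.
bonds_only = independent_scenario("bonds", -0.10)

print(f"joint break:     {stress_return(exposures, joint):+.1%}")
print(f"bond-only break: {stress_return(exposures, bonds_only):+.1%}")
```

With a bond-heavy levered book, the bond-only break alone accounts for half of the joint-break loss in this example, which is why running at least the fixed-income test is a sensible minimum.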

Either way, using r-Dex would allow you to evaluate the Risk in Risk Parity.